Best Practices

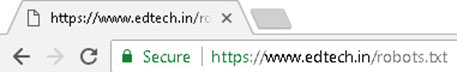

For non-sensitive information, use robots.txt to block crawling

A robots.txt file tells search engine crawlers which pages or sections of a website they may crawl. The file must be named “robots.txt” and placed in the root directory of the site.
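Crawlers only request the file from the root of a host, so the robots.txt that governs any page can be worked out from the page's URL. Below is a minimal Python sketch of that rule. The function name `robots_url` and the example URLs are hypothetical, chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the URL of the robots.txt file that governs page_url.

    Crawlers look for robots.txt only at the root of a host, so the
    page's path, query string and fragment are discarded; the scheme
    and host (including any subdomain and port) are kept.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    # A page deep inside the site is still governed by the root file.
    print(robots_url("https://www.example.com/blog/2020/post.html?ref=home"))
    # A page on a subdomain is governed by that subdomain's own root file.
    print(robots_url("https://shop.example.com/cart#top"))
```

The first call yields `https://www.example.com/robots.txt` and the second `https://shop.example.com/robots.txt`. A file placed anywhere other than the root, such as `/blog/robots.txt`, is not consulted.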

Some pages of your website may contain information that is not useful to your users. In that case, you should block those pages from being crawled. Google Search Console has a robots.txt generator that helps you create a robots.txt file that stops crawlers from crawling certain pages. If your website uses subdomains, you will need a separate robots.txt file for each subdomain, placed at the root of that subdomain.
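The kind of file such a generator produces can be sketched in a few lines of Python. This is only an illustration, not the Search Console tool itself. The function `build_robots_txt`, the hostnames and the blocked paths are all hypothetical. Because each subdomain is a separate host, the sketch builds one file per host.

```python
from typing import Iterable


def build_robots_txt(disallowed_paths: Iterable[str], user_agent: str = "*") -> str:
    """Build robots.txt text that blocks user_agent from each listed path prefix."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


# Hypothetical hosts and paths: each subdomain needs its own file at its own root.
RULES = {
    "www.example.com": ["/drafts/", "/tmp/"],
    "blog.example.com": ["/preview/"],
}

if __name__ == "__main__":
    for host, paths in RULES.items():
        # Lines starting with "#" are comments in robots.txt.
        print(f"# https://{host}/robots.txt")
        print(build_robots_txt(paths))
```

Each generated file would then be uploaded to the root of its own host, for example `https://blog.example.com/robots.txt`.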

Avoid:

- Allowing your site’s internal search result pages to be crawled by Google. Users dislike clicking a search engine result only to land on another search results page on your site.

- Allowing URLs generated by proxy services to be crawled.
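To check that internal search result pages really are kept out of the crawl, you can test your rules with Python's standard `urllib.robotparser` module. This sketch assumes the site's search results live under `/search`, which is a hypothetical path. Note that this module uses simple prefix matching, so it does not reproduce every detail of how Googlebot resolves conflicting rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps internal search result pages out of the crawl.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as loaded

for url in (
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/search?q=widgets",
):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url} -> {'crawlable' if allowed else 'blocked'}")
```

With these rules, the product page stays crawlable and the search results URL is reported as blocked.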

For sensitive information, use more secure methods

If your website contains confidential or sensitive material, blocking it with robots.txt may not be effective, because some search engines don't recognize the Robots Exclusion Standard and may ignore its instructions. In this case, the “noindex” tag is more appropriate because it keeps the page out of Google’s search results. Google must be able to crawl the page to see the tag, so the page should not also be blocked in robots.txt. However, noindex does not restrict access, and anyone with a link can still visit the page directly. For real security, use proper authorization methods, such as requiring a password, or remove the page from your site entirely.
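The two measures in this paragraph can be combined on the server side. Below is a minimal sketch using Python's standard WSGI tooling, based on a few assumptions. The username and password are hypothetical placeholders; a real site would store hashed credentials and serve the page over HTTPS. HTTP Basic authentication also stands in for whatever login mechanism the site actually uses. Requests without valid credentials receive a `401` response. Authorized responses carry an `X-Robots-Tag: noindex` header, which is the HTTP-header equivalent of the `noindex` meta tag.

```python
import base64
import hmac

# Hypothetical credentials, for illustration only; never hard-code real ones.
USERNAME = b"editor"
PASSWORD = b"change-me"


def is_authorized(environ) -> bool:
    """Check an HTTP Basic Authorization header against the expected credentials."""
    header = environ.get("HTTP_AUTHORIZATION", "")
    scheme, _, encoded = header.partition(" ")
    if scheme.lower() != "basic":
        return False
    try:
        decoded = base64.b64decode(encoded, validate=True)
    except ValueError:  # malformed or non-ASCII base64
        return False
    user, _, password = decoded.partition(b":")
    # compare_digest avoids leaking information through timing differences.
    user_ok = hmac.compare_digest(user, USERNAME)
    password_ok = hmac.compare_digest(password, PASSWORD)
    return user_ok and password_ok


def private_page(environ, start_response):
    """WSGI app: require a password and ask search engines not to index the page."""
    if not is_authorized(environ):
        start_response("401 Unauthorized", [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("WWW-Authenticate", 'Basic realm="private"'),
        ])
        return [b"Authentication required.\n"]
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        # Header equivalent of <meta name="robots" content="noindex">.
        ("X-Robots-Tag", "noindex"),
    ])
    return [b"<html><body>Confidential report</body></html>\n"]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    with make_server("127.0.0.1", 8000, private_page) as server:
        server.serve_forever()
```

The password check is what actually protects the content. The `noindex` header only affects search results and would not stop anyone from reading the page.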